Projects

CreepyCrawler is a web crawling program that spiders through company career pages to find new job opportunities.

Given a list of companies to search, job description keywords, and database schemas, the crawler will find opportunities, filter out undesirable positions, score the remaining roles, and return an ordered list to the user.
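The filter, score, and rank steps can be sketched roughly as follows. This is a minimal illustration, not CreepyCrawler's actual code: the `Posting` fields, the keyword-count scoring rule, and the `excluded_terms` filter are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Posting:
    # Hypothetical record; the real crawler's schema may differ.
    title: str
    description: str
    apply_url: str


def score(posting, keywords):
    """Count how many keywords appear in the title or description (case-insensitive)."""
    text = f"{posting.title} {posting.description}".lower()
    return sum(1 for kw in keywords if kw.lower() in text)


def rank_postings(postings, keywords, excluded_terms):
    """Drop postings whose title contains an excluded term, then sort by score, highest first."""
    kept = [
        p for p in postings
        if not any(term.lower() in p.title.lower() for term in excluded_terms)
    ]
    # Keep only postings that match at least one keyword.
    scored = [(score(p, keywords), p) for p in kept]
    scored = [(s, p) for s, p in scored if s > 0]
    return [p for s, p in sorted(scored, key=lambda sp: sp[0], reverse=True)]
```

A substring match is the simplest possible scoring rule; a weighted keyword list would slot into `score` without changing the rest.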

CreepyCrawler can extract position title, ID, description, apply URL, location, and recruiter contact info.

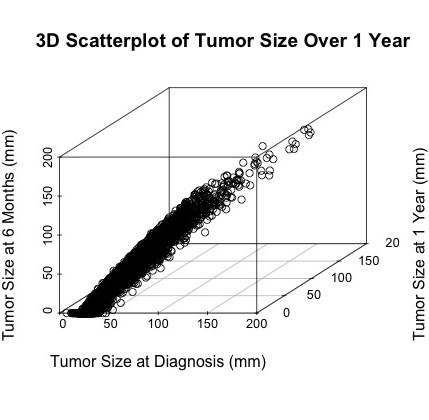

An insurance company wants to score the survival of prostate cancer patients to determine their eligibility for post-diagnosis life insurance.

Given a 31-dimensional dataset, I first research the variables to understand the data from a common-sense point of view.

Next, I verify the accuracy of the data, extract new features, explore distributions, uncover associations, and visualize relationships.

Finally, I build, train, and cross-validate regression models and naive Bayes classifiers to predict patient survivability.

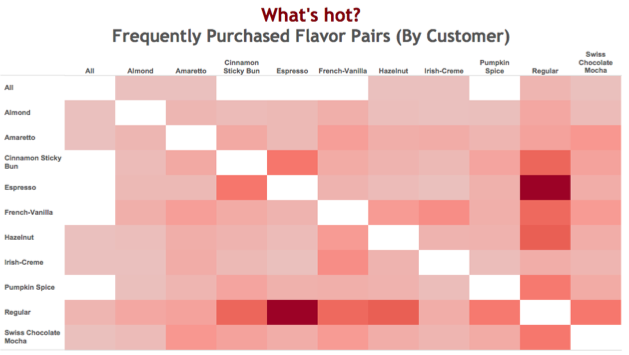

HealthWise Coffee, an online retailer of low-acid coffee, wants to better understand its product and customer bases in an effort to strengthen marketing tactics.

A teammate and I perform a visual investigation of Google Analytics data in Tableau to uncover patterns and trends in coffee sales.

We derive a set of purchasing rules that can be used to drive a more dynamic product-recommendation engine and boost sales.
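One common way to quantify a purchasing rule of the form "customers who buy A also buy B" is with support and confidence. The sketch below is only an illustration of that idea: the project itself derived its rules visually in Tableau, and the `rule_stats` function and the sample order format (one set of product names per order) are assumptions.

```python
def rule_stats(orders, antecedent, consequent):
    """Return (support, confidence) for the rule antecedent -> consequent.

    support    = share of all orders containing both items
    confidence = share of orders with the antecedent that also contain the consequent
    """
    n = len(orders)
    if n == 0:
        return 0.0, 0.0
    with_antecedent = sum(1 for order in orders if antecedent in order)
    with_both = sum(
        1 for order in orders if antecedent in order and consequent in order
    )
    support = with_both / n
    confidence = with_both / with_antecedent if with_antecedent else 0.0
    return support, confidence
```

A rule with high confidence but very low support fires rarely, so both numbers are worth checking before feeding a rule to a recommendation engine.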

UW-Madison's Engineering Hall has a messy floor layout and an inefficient evacuation plan, leading to safety concerns.

I model Engineering Hall as a network of classrooms and hallways to optimize occupant routing and minimize evacuation time.

This involves deriving, building, and debugging an algebraic model, as well as mapping the network and pulling data from building floor plans.

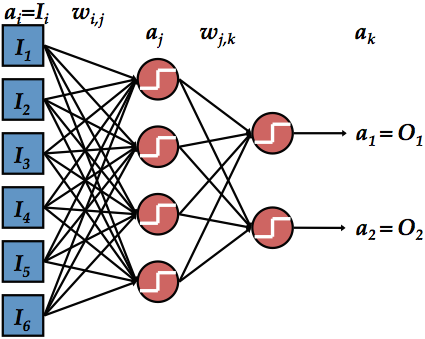

Implemented an artificial neural network to classify handwritten numeric digits.

Achieved 90% accuracy in classification through experimentation with hidden layer, learning rate, learning rule, and training/testing sets.

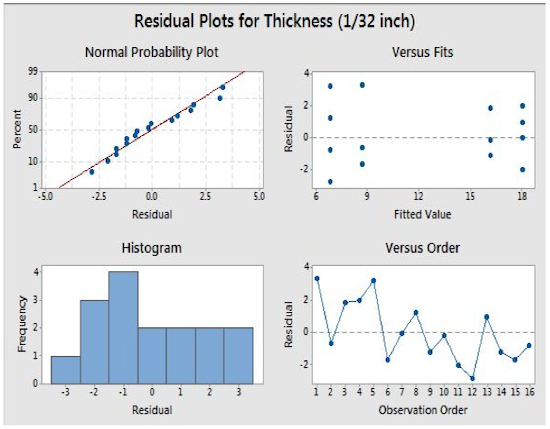

In a design of experiments course, my team and I performed a discovery experiment to determine which cooking factors have a significant effect on pancake thickness.

We design and conduct a 2⁴ full factorial experiment to explore the effects of liquid quantity, liquid type, pancake mix brand, and cooking temperature on the thickness of a pancake.
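With four factors at two levels each, a full factorial design has 16 runs. The sketch below shows how such a design matrix and a main-effect estimate could be computed; the factor names, the -1/+1 coding, and both helper functions are illustrative assumptions, and no experimental data from the project is reproduced here.

```python
from itertools import product

# Hypothetical factor labels for the four cooking factors.
FACTORS = ["liquid_quantity", "liquid_type", "mix_brand", "temperature"]


def full_factorial(factors):
    """Return every combination of low (-1) and high (+1) levels, one dict per run."""
    return [
        dict(zip(factors, levels))
        for levels in product((-1, 1), repeat=len(factors))
    ]


def main_effect(runs, responses, factor):
    """Mean response at the factor's high level minus mean response at its low level."""
    high = [y for run, y in zip(runs, responses) if run[factor] == 1]
    low = [y for run, y in zip(runs, responses) if run[factor] == -1]
    return sum(high) / len(high) - sum(low) / len(low)
```

Calling `full_factorial(FACTORS)` yields the 16 runs; pairing them with measured thicknesses lets `main_effect` estimate how much each factor shifts the response on average.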

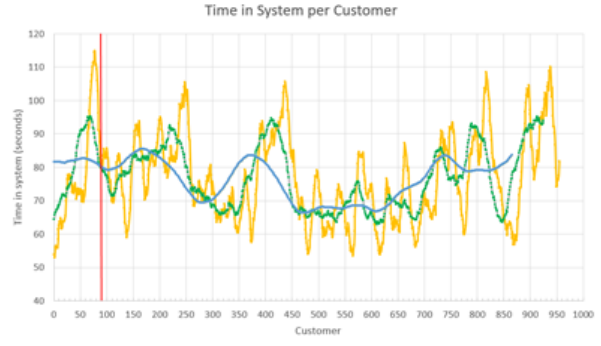

For a simulation course project, my team and I chose to build, test, and redesign a model of a concession stand queueing system to optimize resource utilization, decrease wait times, and boost sales.

We collect time data from concession queues, fit distributions to service and wait time variables, build a model in Arena, verify/validate the model, and design an alternative.
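The core of a queueing model like this can be sketched in a few lines. The project's model was built in Arena; the version below is a simplified stand-in that assumes a single server, first-come-first-served service, and exponentially distributed interarrival and service times. The function name and parameters are hypothetical.

```python
import random


def simulate_queue(arrival_rate, service_rate, n_customers, seed=0):
    """Simulate a single-server FIFO queue.

    Returns (average wait in queue, server utilization).
    """
    rng = random.Random(seed)
    arrival = 0.0          # arrival time of the current customer
    server_free_at = 0.0   # time the server finishes its current job
    total_wait = 0.0
    busy_time = 0.0
    for _ in range(n_customers):
        arrival += rng.expovariate(arrival_rate)
        start = max(arrival, server_free_at)  # wait if the server is busy
        service = rng.expovariate(service_rate)
        total_wait += start - arrival
        busy_time += service
        server_free_at = start + service
    return total_wait / n_customers, busy_time / server_free_at
```

Comparing the output for the current service rate against a faster one is a quick way to see how a redesigned stand would trade utilization for shorter waits.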

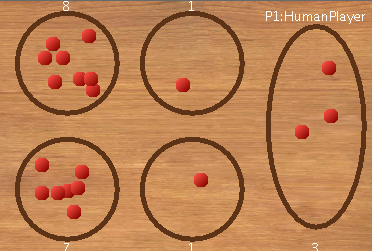

I built the logic for a game-playing AI for the classic board game Mancala.

My player performs a search of the game space to find the move that minimizes its opponent's maximum possible earnable points.

I perform a heuristic evaluation of move strength and prune the search tree for more accurate and efficient searching.
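The search-and-prune idea can be illustrated with minimax and alpha-beta pruning on a toy game tree. This is a generic sketch, not the Mancala player itself: the tree is represented as nested lists whose leaves are heuristic scores from the AI's point of view, which is an assumption made to keep the example short.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf):
    """Return the minimax value of node, skipping branches that cannot change the result."""
    if not isinstance(node, list):
        return node  # leaf: heuristic score of the position
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the opponent would never allow this branch
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:
            break  # we already have a better option elsewhere
    return best


def best_move(children):
    """Index of the move whose worst-case outcome (after the opponent replies) is best."""
    scores = [alphabeta(child, maximizing=False) for child in children]
    return max(range(len(scores)), key=scores.__getitem__)
```

In a real player the nested lists would be replaced by move generation from the board state, with the heuristic applied once a depth limit is reached.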